SMILEi Together

Highlights from our visiting cohort advancing Socially Assistive Robotics (SAR) for older adults

Jun 9, 2025

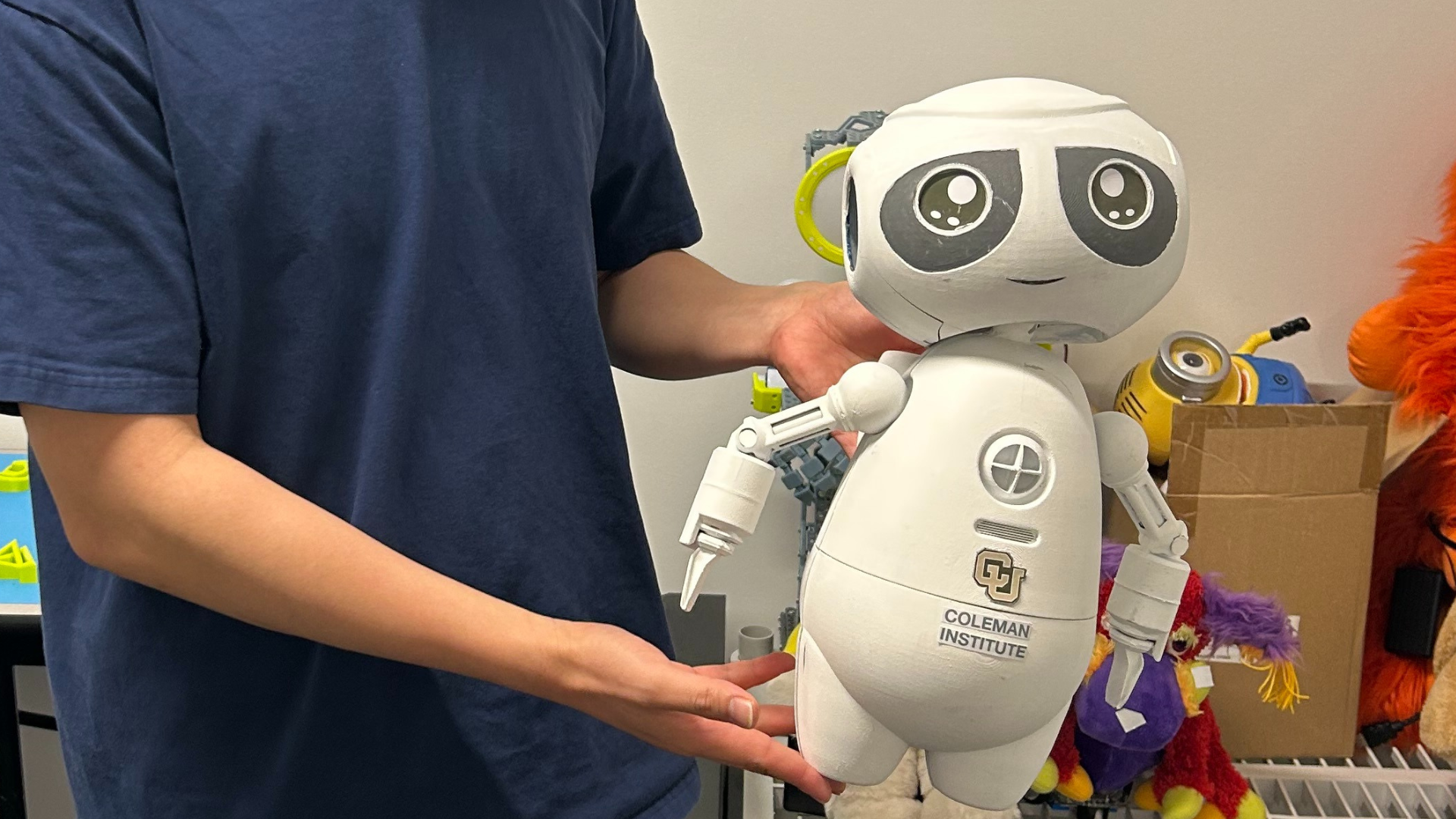

This summer, visiting students from Mexico joined CIDE’s Socially Assistive Robotics Lab to advance SMILEi (Social Multimodal Interface for Learning and Embodied Intelligence). Together we are pushing engineering design and immersive interaction (through audio, video, and haptics) so that older adults with cognitive impairments can stay engaged, supported, and connected.

Why this work matters

Maintaining mental well-being in older adults, especially those impacted by cognitive disabilities, is a formidable challenge. Cognitive decline often begins with short-term memory loss and can progress to changes in language, behavior, and other functions. Needs evolve from light reminders and navigation support to assistance with many activities of daily living.

Drawing on more than a decade of pediatric research, CIDE is extending its mission to empower people with cognitive disabilities, with a new emphasis on older adults. Socially Assistive Robots (SARs) can complement human care by supporting individuals, easing caregiver burden, and helping institutions deliver more sustainable services. Our aim is to turn these benefits into practice in clinical and community settings.

Under the direction of Cathy Bodine and Ismael Sanchez-Osorio, we are building a responsive SAR capable of generating speech, facial expressions, and body language in real time. The work covers robot hardware, AI techniques for understanding and producing human-like responses, and a reliable hardware and software stack for meaningful activities and social interaction. Principles of inclusive design and human-robot interaction guide every decision.

A summer of co-creation

This summer’s progress was a collaborative effort in which mechanical design, electronics, control systems, software, and interaction design came together in a tightly woven process. Each contribution amplified the others, building momentum that moved SMILEi closer to becoming a responsive and engaging companion.

Mechanical design & aesthetics

In February, Eduardo Tijerina (B.S., Mechanical Engineering, Monterrey Institute of Technology and Higher Education) joined our team to help refine the mechanical design of SMILEi. He redesigned the robot’s frame, replacing aluminum with carbon fiber and other composites, making the structure lighter and sleeker. To make SMILEi more visually appealing and approachable, Eduardo applied principles of delightful design—carefully selecting shapes, colors, and textures—and used professional finishing techniques to give the robot an attractive, polished look. Fun fact: Eduardo drove solo from Southeast Mexico to Denver just to be part of this project!

Rapid prototyping & build

In April, Troy Ogborn joined as a volunteer, bringing energy and problem-solving skills right when we needed them most. Despite 3D-printing setbacks, Troy kept prototyping on track by iterating CAD designs and fabricating new parts. He also helped assemble power and communication cabling—the connective tissue that turns designs into a working robot. Collaborating with Eduardo and Dr. Yeongdae Kim (CIDE Postdoc), Troy played a key role in bringing the hardware to life on schedule.

Expressive control & teleoperation

In parallel, Luis Alberto Tabarez (Ph.D. candidate, University of Guadalajara) and his advisor Prof. Emmanuel Nuño, long-standing collaborators of Dr. Sanchez-Osorio, contributed expertise in control systems and teleoperation. For several months, Luis designed controllers to make SMILEi’s arms move more naturally and expressively. In May, he traveled to Denver for a two-week visit to implement and fine-tune these controllers on the robot. Thanks to their efforts, SMILEi now performs smoother, more engaging gestures, making interactions feel intuitive and lifelike.

Voice, gaze, and the first integrated stack

Prof. Alberto Muñoz (Computer Vision & AI, Monterrey Institute of Technology) led a team including Gustavo de los Ríos Alatorre (Ph.D. candidate) and Arturo Murra (B.S., Robotics) to develop a voice transmission and modulation system, giving SMILEi a warmer, natural-sounding voice. In May, Arturo traveled to Denver to test and integrate the system. Working with Jack Lueck (M.S. student, CU Denver) and Luis Tabarez, the team deployed SMILEi’s first integrated software stack, unifying perception, expression, and control. Jack animated SMILEi’s eyes and built a gaze-tracking system that lets the robot follow a caregiver’s line of sight in real time. Seeing the eyes come alive—and hearing SMILEi’s first natural voice—was a major milestone.

What’s next in integration

This semester, Jordan Palafox and Roberto Estrada, students of Prof. Muñoz, are joining our lab for a research stay. They are developing a digital twin of SMILEi and a platform to systematically collect and analyze human-robot interaction data. They are also helping finalize software integration by linking voice modulation and gaze tracking with the haptic teleoperation system. These contributions will provide essential tools for future multimodal AI research and help us evaluate SMILEi’s impact more effectively.

Where this leads

With these milestones achieved, the next phase focuses on making SMILEi more capable and effective.

Near term

- Enhance perception and dialogue on the newly integrated software stack.

- Refine comfort, safety, and usability through testing with clinicians and caregivers.

- Prepare evaluation protocols with older adults.

Mid term

- Leverage the digital twin and interaction-data platform to accelerate multimodal learning.

- Enable greater personalization and move toward higher levels of autonomy.

- Maintain a strong commitment to inclusive design principles.

Meanwhile, several contributors continue their journeys: Arturo Murra has begun an M.S. in Bioengineering at CU Denver under Dr. Sanchez-Osorio; Eduardo Tijerina and Troy Ogborn are preparing the next iteration of SMILEi’s mechanical design; Luis Tabarez advances controller development; and the audio and computer vision teams are refining SMILEi’s software modules.

Together, these efforts are shaping a platform designed to preserve dignity, promote independence, and foster meaningful connections for older adults—while also supporting the caregivers who make those connections possible.

Author: Ismael Sánchez-Osorio, PhD

Join the next chapter

This is only the beginning. As SMILEi evolves, so does our vision of creating human-centered robotic technologies that empower older adults and enrich lives. We invite you to follow our journey, collaborate with us, and be part of the next chapter in socially assistive robotics. Learn more about our research initiatives.

SMILEi is collaboratively funded by the Coleman Institute for Cognitive Disabilities and the Center for Innovative Design and Engineering.